How to Use Hand Tracking in VR and XR Applications – Guest Post by Ultraleap

Ultraleap XR and VR hand tracking

Our hands are one of the primary ways we interact with the people and the world around us. Given how familiar we all are with using our hands, being able to use them directly in VR is critical to lowering the barrier to entry.

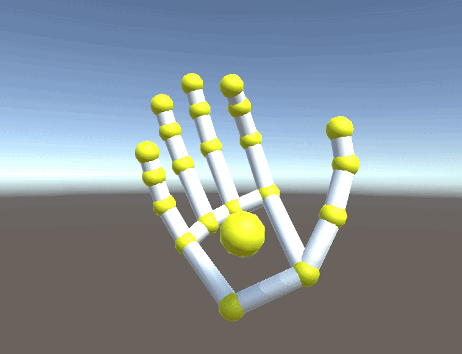

Ultraleap, as a company, has focused on providing the most intuitive and natural way of using one’s hands within VR and XR software. Using proprietary infra-red based tracking, our technology can help map out the exact location of a user’s hands, right down to the individual fingers and joints, with ultra-low latency and industry-leading accuracy. With no wearables or additional devices, hand tracking is the most intuitive way that we can possibly interact within the oncoming metaverse.

VR hand tracking and Varjo

Being at the high end of the spectrum of solutions for VR/XR hand tracking, Ultraleap technology has proven a perfect partner for Varjo hardware. Ultraleap’s 5th generation hand tracking technology, known as Gemini, is incorporated into both the Varjo XR-3 and Varjo VR-3 headsets. Thanks to fast-initializing, two-hand tracking that works even in challenging environments, this integration allows Varjo’s enterprise customers to unlock the full range of hand tracking features (interaction and gestures), as well as innovative use cases such as a brand-new way of tackling hand occlusion (Varjo skeletal masking).

Recently, members of the Ultraleap team visited the Varjo HQ in Helsinki, Finland, to take part in a webinar where we explored the full potential of hand tracking for virtual and mixed reality. We looked at some of the fascinating use cases where the technology is already in use by Varjo customers. To see a full recording of that insight session, click here.

At the end of the webinar, we got fantastic questions from the live audience. We couldn’t answer all at the time, but we have compiled some of the best ones into a handy list for you below.

Contributing to responses:

Kemi Jacobs, Senior QA Engineer, Ultraleap

Chris Burgess, XR Product Manager, Ultraleap

Max Palmer, Principal Software Architect, XR Team, Ultraleap

Ferhat Sen, Solutions Engineer, Varjo

Jussi Karhu, Integrations Engineer, Varjo

XR and VR hand tracking Q&A

How far out are we on using haptics with hand tracking?

Chris: We already use haptics and hand tracking together and we are continually working on these technologies.

Question for the UL team, what range of vertical detection do you see being possible where the accuracy of hand detection is still “usable” without the hands being visible in the HMD? Use case would be having a natural hand gesture with arms more naturally down under your view, instead of directly in front.

Kemi: This is not something that we’re currently measuring, but because hands can be detected at the edges of the HMD FoV, tracking may be usable even when the hands are not visible. Part of this will depend on the design of the application being used.

Do you develop medical content for your technology? Or is the model to use your device and find a developer?

Ferhat: Varjo and Ultraleap provide the hardware and software, and there are certainly 3rd party software providers across many domains and industries, medical included. You can check out Varjo.com to see which off-the-shelf software is already compatible with Varjo devices, and both the Varjo and Ultraleap teams are on hand to give guidance when considering developing custom software experiences.

What needs to improve with respect to hand tracking in order to have it working accurately and reliably in flight simulators that are operated completely in VR, when operating switches and buttons? At the moment it is still pretty “rough”.

Chris: In Unity and Unreal, we can auto-scale the artwork of a hand to the real-life hand in question, and that is critical to be able to accurately press what you are looking at. As it stands, with the right setup, you can get highly accurate hand tracking with Ultraleap, and of course, we are constantly developing the technology to become even more seamless as time goes on.

How does the IR light tracking work outside in the sun?

Kemi: Ultraleap hand tracking works well if you are in a space with sunlight coming in. However, there are limits and direct sunlight may affect the performance due to less contrast between the hands and the background.

Does the exo-skeleton representation of the model represent the actual size of the hands, or is the size of the hands preset? I have small hands and tracking gloves always make my hands bigger.

Max: At Ultraleap, we try to accommodate all different aspects of people’s hands and that includes hand sizing. If you turn on hand scaling, we use procedural hand models that should scale to the size of the user’s hands and provide you with a better experience.

Do the LiDAR sensors contribute to VR hand tracking? Or is it mainly IR?

Jussi: It is mainly infrared. In Varjo software, there is a possibility to use depth occlusion (LiDAR-based) to occlude hands in front of virtual objects, but this is a different technology from Ultraleap’s IR-based VR hand tracking technology.

Non-hand tracking related question: We are looking for the “Video-See Through Material” for Unity, so that the material gets transparent. We can’t find it. And an unlit material using RGBA(0,0,0,0) material is not working. How can we do it?

Max: I would suggest that Varjo add such a material to their plugin – for all render pipelines – because in order to do this myself, I had to modify an unlit shader to be able to create it.

We’ve seen in real use-cases how Ultraleap Gemini has some occlusion issues (eg. if the hands are partially occluded they aren’t detected, or when they overlap the tracking fails). Does the integration of Ultraleap with Varjo’s depth sensors significantly reduce these issues?

Chris: The hand tracking sensors and depth sensors are independent and the depth data is not used by the hand tracking processing/service.

How many cameras does such a VR headset need to get optimal hand tracking?

Kemi: The Varjo XR-3 and VR-3 have two cameras on the headset and the wide FoV means that you can get optimal hand tracking.

Is this applicable for Varjo VR-2 or just VR-3 and XR-3?

Jussi: The Varjo XR-3, VR-3, and VR-2 Pro currently have Ultraleap’s hand tracking technology integrated.

I understand showing the virtual hands in the virtual environment, but is it possible to show real hands in the virtual environment? For example, using XR-3 in a completely virtual computer-aided design (CAD) environment, and the video cameras detect the hands (and arms, legs, and rest of the user’s body) and overlay that in the virtual environment.

Ferhat: Yes, it is technically possible, and there are two ways to do it. First, if the CAD software is integrated with the XR-3, you could just enable it by clicking it in the CAD software. Second, you can use the Varjo Lab Tools application, available from developer.varjo.com. This application is a great way to see your hands overlaid on top of a virtual environment, even if the software you are using does not support it.

Oculus’ hand tracking cannot track one’s hands merging together (for example, if one shakes one’s own hands). Can you implement that aspect?

Kemi: Ultraleap hand tracking allows for close interaction between individual fingers, but there is a limit, and tracking can still be lost at certain angles and with certain hand positions. However, the use of IR means that these potential limitations are likely to be very minimal.

Is there any way to differentiate another user’s set of physical hands if they are brought into the headset’s view? Meaning, is a set of hands tracked and recognized so that a third hand brought into the view won’t confuse the tracking (is there a way to “tag” a pair of hands based on its shape, etc.)? (Just in case there would ever be a use case for that kind of scenario; I know it is a bit silly to push more hands into the view in general.) Also, what is the tracking range (min-max), and how fast does the tracking deteriorate the further one pushes their hands? Thinking about users with really long arms.

Max: The tech is good at sticking with the primary user’s hands. It is possible to trick the system, but if the primary user’s hands stay in the foreground compared to the additional hands, it is unlikely to false trigger. The preferred tracking range is 10 cm to 75 cm, with a maximum of up to 1 m.
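As a rough illustration of how an application might act on those range figures, here is a minimal sketch that sorts a hand-to-sensor distance into zones. It assumes the figures above mean 10 cm to 75 cm preferred and 1 m as the outer limit. The function name and the zone labels are made up for this example and are not part of any Ultraleap or Varjo API.

```python
# Range figures taken from the answer above; the zone names are hypothetical.
MIN_RANGE_CM = 10.0
PREFERRED_MAX_CM = 75.0
ABSOLUTE_MAX_CM = 100.0


def classify_range(distance_cm: float) -> str:
    """Return a coarse zone label for a hand at distance_cm from the sensor."""
    if distance_cm < MIN_RANGE_CM:
        return "too_close"
    if distance_cm <= PREFERRED_MAX_CM:
        return "preferred"
    if distance_cm <= ABSOLUTE_MAX_CM:
        return "beyond_preferred"
    return "out_of_range"
```

An application could use a label like this to nudge users (for example, those with long arms) to bring their hands back towards the preferred zone.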

So the hand tracking takes into account head movement, which you have just mentioned. As an academic researcher, I am interested in getting the hand tracking data in conjunction with the head tracking data. Is this possible? (I know eye-tracking data can be collected via Varjo Base.)

Max: You could log the Hand Frame data as it comes in using a script. Alternatively, the VectorHand class is a more lightweight class intended to serialize hand data across a network. It can also be converted to a compressed byte representation using FillBytes (but that may reduce precision). Note that a VectorHand can be constructed from a Leap Hand (found inside the Frame data structure). There are some useful scripting references, including how to access hand data, on the Ultraleap docs site.
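To make the logging idea concrete, here is a minimal, engine-agnostic sketch of writing hand and head samples side by side with a shared timestamp. It does not use the Ultraleap or Varjo APIs: the `Sample` record, its field names and `write_samples` are all hypothetical, and in a real project the values would come from the Frame data and the head pose exposed by the game engine.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, TextIO, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sample:
    """One hand sample paired with the head pose at the same time (hypothetical fields)."""
    timestamp_s: float
    is_left: bool
    head_position: Vec3
    palm_position: Vec3


def write_samples(samples: Iterable[Sample], out: TextIO) -> int:
    """Write samples as CSV rows and return the number of data rows written."""
    writer = csv.writer(out)
    writer.writerow([
        "timestamp_s", "hand",
        "head_x", "head_y", "head_z",
        "palm_x", "palm_y", "palm_z",
    ])
    count = 0
    for s in samples:
        hand = "left" if s.is_left else "right"
        writer.writerow([s.timestamp_s, hand, *s.head_position, *s.palm_position])
        count += 1
    return count
```

Keeping both poses on one row per timestamp means the analysis step does not need to re-align two separate logs afterwards.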

Will there be an update in the future for the Linux SDK for Ultraleap/Leap Motion? I’ve made some bindings in Lua to use the LM device with awesome-wm.

Chris: We are currently working on a Linux build and have an early preview ready – if you’d like to be considered for early access please let us know by emailing support@ultraleap.com with an outline of what you are looking to build and why, and we’d be happy to consider your application.

What aspects of this VR hand tracking experience are you going to improve in the near future?

Chris: We make early releases of features in our Unity Plugin that we are experimenting with in a Preview Package. This gives our users insights into areas where we are looking to improve the experience. Once we have proved them out in Unity, we then look for feature parity with Unreal.

Is there any way to capture the hand movement data for export? For instance, could you record a general metric for how users are moving their hands (e.g., fast movement versus slow movement)?

Jussi: Currently we don’t export the hand tracking data directly from Varjo Base (like what is possible with VR eye tracking data). Yet. But there are separate applications that can make this possible.
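For the "fast versus slow" metric mentioned in the question, one simple option is to compute palm speed from consecutive timestamped positions once the data has been captured by some other means. The sketch below assumes samples are available as `(t_seconds, x, y, z)` tuples in metres. The 0.5 m/s threshold and the function names are arbitrary illustrations, not values recommended by Ultraleap or Varjo.

```python
import math
from typing import List, Sequence, Tuple

# (t_seconds, x_m, y_m, z_m): an assumed layout for recorded palm positions.
PalmSample = Tuple[float, float, float, float]


def speeds(samples: Sequence[PalmSample]) -> List[float]:
    """Return the speed in m/s between each pair of consecutive samples."""
    result = []
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        result.append(math.dist((x0, y0, z0), (x1, y1, z1)) / dt)
    return result


def label_movement(samples: Sequence[PalmSample],
                   fast_threshold_m_s: float = 0.5) -> str:
    """Label a recording as 'fast' or 'slow' based on its mean palm speed."""
    v = speeds(samples)
    if not v:
        return "unknown"
    mean_speed = sum(v) / len(v)
    return "fast" if mean_speed >= fast_threshold_m_s else "slow"
```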

How does certain clothing (IRL) affect the reliability of VR hand tracking, such as gloves, military sleeves, heavy jackets, etc.?

Kemi: Hand tracking works with sleeves/jackets, but if sleeves occlude a large portion of the hand then performance will decrease. Hand tracking also works with gloves, but it will depend on how the material looks in infrared. For example, cotton, rubber and nylon work, but black leather or reflective material will have reduced performance.

Do you support the UIElement or newest Unity UI system?

Max: No, not yet.

Are there plans to support two or more Leap devices at the same time? Currently, only one Leap controller is supported, but it would be useful to place additional Leap controllers in places which are not visible to, or are occluded from, the Varjo headset’s Ultraleap view.

Chris: We are currently working on a way in which our users can position multiple Ultraleap cameras, pre-configured to work in any of the 3 supported orientations, attached to a single Windows machine. This will reduce the issues that are caused by a user’s hands being out of range of the headset. A version of this for Varjo headset users is on our roadmap but we can’t commit to a release date at this moment in time.

What is the process for creating and training the system for new or custom hand gestures (for Unreal)?

Max: Right now there isn’t a system for training gesture recognition (machine learning-based) but Pose Detection components are provided which can be combined using a Logic Pose Detection component to recognise more complex gestures. See this blog post for more info.
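The idea of combining simple pose detectors with logic can be sketched in a few lines. This is a conceptual illustration only, not the Unreal or Unity plugin API: the `Hand` dictionary, `finger_extended`, `all_of` and `none_of` are hypothetical stand-ins for the Pose Detection and Logic Pose Detection components described above.

```python
from typing import Callable, Dict

# Hypothetical hand description: finger name -> whether it is extended.
Hand = Dict[str, bool]
Detector = Callable[[Hand], bool]


def finger_extended(name: str) -> Detector:
    """Detector that fires when the named finger is extended."""
    return lambda hand: hand.get(name, False)


def all_of(*detectors: Detector) -> Detector:
    """Logical AND over detectors."""
    return lambda hand: all(d(hand) for d in detectors)


def none_of(*detectors: Detector) -> Detector:
    """Fires only when none of the given detectors fire."""
    return lambda hand: not any(d(hand) for d in detectors)


# A "point" gesture: index extended while middle, ring and pinky are curled.
point = all_of(
    finger_extended("index"),
    none_of(
        finger_extended("middle"),
        finger_extended("ring"),
        finger_extended("pinky"),
    ),
)
```

Calling `point(hand)` on each tracking update then yields a single boolean for the composite gesture, built entirely from simple per-finger checks rather than a trained model.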

Are all of those gestures/features currently available in Unreal as well?

Chris: Yes. We build everything in Unity first and then port this functionality over to Unreal.

What headset is used for this mixed reality thing?

Jussi: The Varjo XR-3.

With mixed-reality, I assume the mask mesh would be based on a “bare” hand. Can we customize that mesh to be thicker to reveal a real-world gloved hand?

Max: There are options to set various offsets on the scaled hand mesh in the Unity plugin, under the fine-tuning options section of the Hand Binder script.

We’re most interested in making a UI that wraps around a user’s wrist like a large-screen watch. What are the current limitations or considerations with two-hand interactions that we should be thinking about? ie: Should buttons still be offset from the body?

Chris: Occlusion and two-handed interactions with Ultraleap hand tracking are not affected like other solutions can be. This means that you can easily add UI that wraps around a user’s wrist – however, we would recommend offsetting from the body simply because it makes your UI more robust.

This pass-through mechanism seems really cool and simple to use! Another thing I didn’t catch earlier is how does recording the gestures work (other than the basic ones)? Is there a ready-made component in the SDK for that or do you need to write a script to go through the bone positions and compare them?

Max: There are various scripts for gesture recognition in the DetectionUtilities folder in the Core module (for Unity). These can be combined to define the characteristics of the hand pose to detect.

Is it possible to integrate Ultraleap hand tracking into Microsoft Flight Simulator or X-Plane? (Not sure if they use OpenXR?) The perfect integration would be a Varjo XR-3, operating the cockpit buttons with Ultraleap hand tracking instead of game controllers.

Max: This is not something we are actively pursuing. However, there are people who are attempting to independently add support for our tracking in MSFS – you can read more on their progress in this thread.

Now that more and more investments are coming in for VR, AR and XR, will Moore’s Law start to work for VR as well?

Chris: We expect the development around XR to increase hugely over the next few years and we’re excited to be a part of it! Moore’s Law is for a specific industry but something similar could happen to XR hardware too.

Have you found any good alternatives to haptics for common actions like tapping a button? For example, using sound, visual feedback, etc. instead. What works best?

Max: Sound generally proves beneficial when not looking at the item, something more prevalent in VR. It’s easier to provide nuanced transitioning info as it’s easier to distinguish. People tend to notice mismatches faster. You generally need some form of state change when the “action” occurs, regardless of what the button itself is doing. Having no states, or having some but not all (hovers but no select, pushing but not complete, etc) will confuse the user.
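One way to make sure no state is left without feedback is to model the button as a small state machine and attach a cue (sound or visual) to every transition. The sketch below is a generic illustration under assumed conventions: the state names, the distance thresholds and the sign convention (positive distance means the fingertip is above the button surface, negative means it is pushed in) are all hypothetical.

```python
from enum import Enum
from typing import Iterable, List, Tuple


class ButtonState(Enum):
    IDLE = "idle"
    HOVER = "hover"
    PRESSING = "pressing"
    SELECTED = "selected"


# Hypothetical thresholds in metres, relative to the button surface.
HOVER_DISTANCE_M = 0.05
SELECT_DEPTH_M = 0.01


def state_for(distance_m: float) -> ButtonState:
    """Map a fingertip distance to a button state."""
    if distance_m > HOVER_DISTANCE_M:
        return ButtonState.IDLE
    if distance_m > 0:
        return ButtonState.HOVER
    if distance_m > -SELECT_DEPTH_M:
        return ButtonState.PRESSING
    return ButtonState.SELECTED


def feedback_events(
    distances: Iterable[float],
) -> List[Tuple[ButtonState, ButtonState]]:
    """Return every (old, new) transition; each one should trigger a cue."""
    events = []
    state = ButtonState.IDLE
    for d in distances:
        new_state = state_for(d)
        if new_state is not state:
            events.append((state, new_state))
            state = new_state
    return events
```

Because every transition appears in the returned list, it is easy to check that hover, press and select each have a matching sound or visual change, which avoids the "some but not all" situation described above.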

Are there plans to have better out-of-the-box support for multiple Ultraleap devices? Right now it’s mostly bare bones. Will it have a greater focus for Ultraleap in near future?

Chris: We are currently working on a way in which our users can position multiple Ultraleap cameras, pre-configured to work in any of the 3 supported orientations, attached to a single Windows machine. This will reduce the issues that are caused by a user’s hands being out of range of the headset. A version of this for Varjo headset users is on our roadmap but we can’t commit to a release date at this moment in time.

Can hand tracking rotate a knob on a cockpit control panel?

Chris: Yes, however the size of the knob will be important. The smaller the knob, the more likely that the hands will fully occlude it, so please bear this in mind.

In regard to haptics at Ultraleap, will the same philosophy of making your experience and knowledge freely available to developers continue?

Chris: We’re not too sure exactly what you mean here, but our research and academic teams are well embedded into the haptics community and that will not change. If there’s a specific question you have regarding knowledge, please get in touch with us: support@ultraleap.com.

Is hand tracking planned for the Varjo Aero used in flight simulation?

Chris: At this moment in time, Ultraleap hand tracking is only fully integrated into the VR-3 and XR-3. However, users can attach an external Ultraleap camera to an Aero today, running it via a Windows PC, so that it works with hand tracking.

Is there a contact for academic researchers at Varjo and/or Ultraleap? Asking for my own research.

Chris: Please use hello@ultraleap.com as well as hello@varjo.com and someone will get in touch with you.

How hard is it to make the hands/fingers collision objects that can deform physics objects in the scene? We saw the hands push blocks, but are the individual fingers complex meshes?

Max: The fingers, from a physics perspective, are a set of colliders. The way the interaction engine supports hand interaction with objects is a combination of ‘normal’ contact and soft body interactions. If you have a method of deforming meshes based on collider collisions then that could be an option. It’s not something we have an example for.

Can you please add back in the ‘Hand Pose Confidence’ value, which was removed from old version of the Ultraleap SDK 🙂 That would be so great!

Chris: We don’t have current plans to add this back in but if you can contact support@ultraleap.com and tell us why it’s so important to your work, we can review this.

Will there be support for multiple cameras to be used for better hand tracking data and to avoid losing hand tracking when one camera isn’t looking at the hand?

Chris: We are currently working on a way in which our users can position multiple Ultraleap cameras, pre-configured to work in any of the 3 supported orientations, attached to a single Windows machine. This will reduce the issues that are caused by a user’s hands being out of range of the headset. A version of this for Varjo headset users is on our roadmap but we can’t commit to a release date at this moment in time.

Is latency an issue for VR hand user interfaces within a VR simulator environment? i.e., training for emergency procedures on a flight deck where speed is important.

Chris: Ultraleap hand tracking deals with latency very efficiently meaning fast-moving hands are not an issue for our hand tracking.

Is “head”, not eye-tracking, data possible in the Varjo?

Max: The headset provides head pose data which is accessible in the game engine.